Humanoid Diffusion Controller

Video

A continuous sequence of high-difficulty motions, including the first Webster flip ever achieved on the Unitree G1, all performed by a single unified policy.

Continuous large-range downward chopping motions performed on the Unitree H1

Abstract

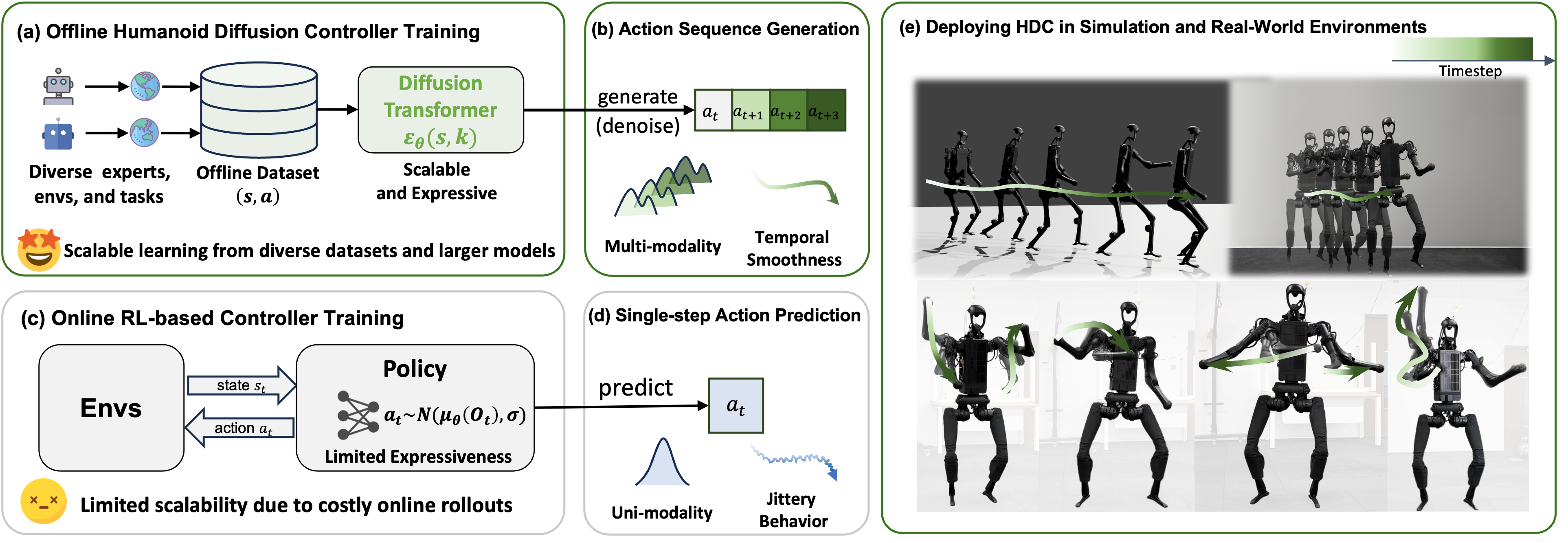

We introduce the Humanoid Diffusion Controller (HDC), the first diffusion-based generative controller for real-time whole-body control of humanoid robots. Unlike conventional online reinforcement learning (RL) approaches, HDC learns from large-scale offline data and leverages a Diffusion Transformer to generate temporally coherent action sequences. This design provides high expressiveness, scalability, and temporal smoothness. To support training at scale, we propose an effective data collection pipeline and training recipe that avoids costly online rollouts while enabling robust deployment in both simulated and real-world environments. Extensive experiments demonstrate that HDC outperforms state-of-the-art online RL methods in motion tracking accuracy, behavioral quality, and generalization to unseen motions. These findings underscore the feasibility and potential of large-scale generative modeling as a scalable and effective paradigm for generalizable and high-quality humanoid robot control.

Diverse Motions by One HDC model

Long-horizon Motions with Smoothness

High-dynamic Motions

Precise Motion Tracking

Method

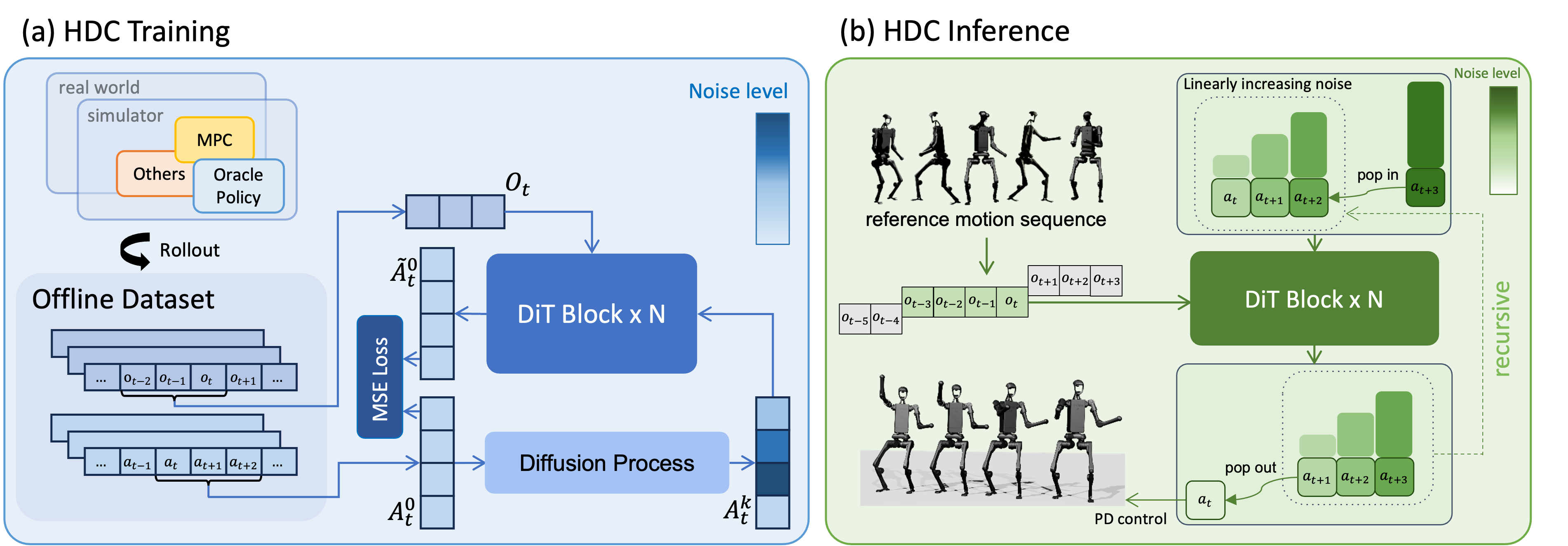

HDC training and inference. (a) During training, a future action sequence and a past observation sequence are sampled from offline data. Each action in the sequence is perturbed with a different noise level, and the DiT model, conditioned on the observation sequence, learns to denoise the entire action sequence. (b) During inference, each element in the action buffer is initialized with linearly increasing noise. The denoiser iteratively denoises the buffer until the first action becomes clean; that action is then executed and removed from the buffer. A new, purely noisy action is appended to the end, and the process repeats recursively.
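The buffer mechanics described in the caption can be illustrated with a minimal NumPy sketch. It is not the HDC implementation: the linear interpolation between clean action and Gaussian noise, the one-level-per-control-step schedule, the zero-signal start-up buffer, and all function names (`linear_noise_levels`, `perturb_actions`, `init_buffer`, `rolling_step`, `toy_denoiser`) are assumptions made for illustration, and the denoiser is a stand-in for the trained DiT.

```python
import numpy as np


def linear_noise_levels(horizon):
    """Per-slot noise levels 1/H, 2/H, ..., 1 (last slot is pure noise)."""
    return np.arange(1, horizon + 1) / horizon


def perturb_actions(actions, levels, rng):
    """Training-side corruption: each action gets its own noise level.

    Assumed form: x_t = (1 - t) * x_0 + t * eps, with eps ~ N(0, I).
    """
    t = levels[:, None]
    noise = rng.standard_normal(actions.shape)
    return (1.0 - t) * actions + t * noise


def init_buffer(horizon, action_dim, rng):
    """Start-up buffer with linearly increasing noise.

    Assumption: no action estimate exists yet, so the signal part is zero.
    """
    clean = np.zeros((horizon, action_dim))
    return perturb_actions(clean, linear_noise_levels(horizon), rng)


def rolling_step(buffer, levels, obs, denoiser, rng):
    """One control step: denoise every slot by one level, pop, append noise."""
    horizon = len(buffer)
    t = levels[:, None]
    t_next = t - 1.0 / horizon              # slot 0 reaches level 0 (clean)
    x0_hat = denoiser(buffer, levels, obs)  # predicted clean action sequence
    eps_hat = (buffer - (1.0 - t) * x0_hat) / t
    denoised = (1.0 - t_next) * x0_hat + t_next * eps_hat
    action = denoised[0]                    # clean action to execute now
    fresh = rng.standard_normal((1, buffer.shape[1]))
    # Remaining slots shift forward; the new last slot is pure noise, so the
    # buffer again matches `levels` and the step can be repeated.
    new_buffer = np.concatenate([denoised[1:], fresh], axis=0)
    return action, new_buffer


def toy_denoiser(buffer, levels, obs):
    """Hypothetical stand-in for the DiT: predicts obs as every clean action."""
    return np.tile(obs, (len(buffer), 1))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    horizon, action_dim = 8, 3
    levels = linear_noise_levels(horizon)
    buffer = init_buffer(horizon, action_dim, rng)
    for step in range(3):
        obs = np.full(action_dim, 0.1 * step)  # placeholder observation
        action, buffer = rolling_step(buffer, levels, obs, toy_denoiser, rng)
        print(step, action)
```

Under these assumptions the per-slot noise levels are the same after every step, so one denoiser call per control step yields one executable action, while later slots keep being refined as new observations arrive.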

Quantitative Results

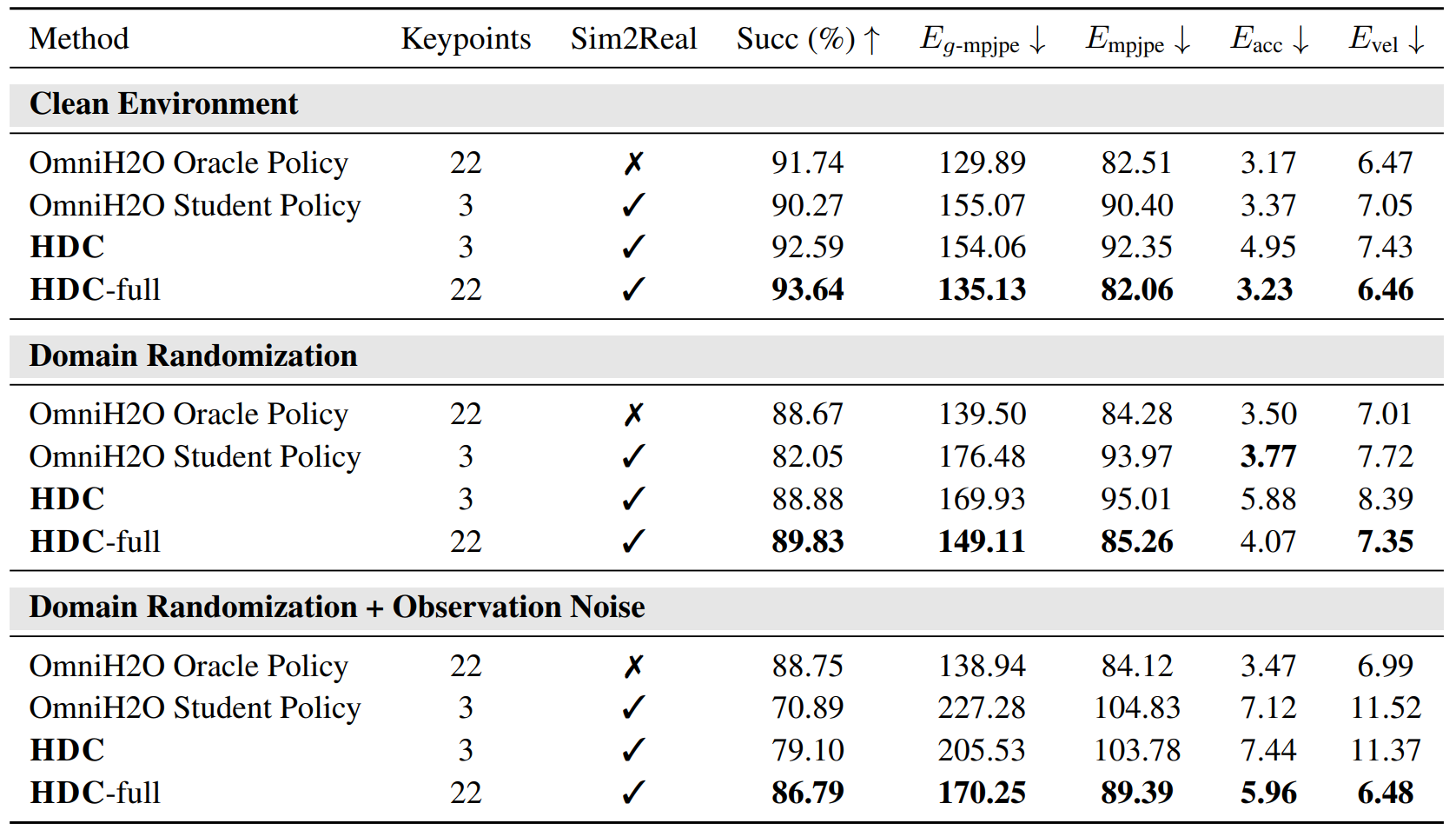

Comparison of HDC and baseline methods on motion tracking. HDC consistently outperforms the online RL-based baseline, demonstrating its capability to acquire high-dynamic control skills from offline data while maintaining robustness against dynamics uncertainty, observation noise, and compounding errors. Notably, HDC surpasses the oracle policy in terms of success rate, highlighting its strong multi-skill learning ability. We attribute this advantage to the model's high capacity and its ability to represent multi-modal action distributions, without being limited to a single behavior mode.

Citation

@article{

coming soon

}
